Hi all,

This is a little different from other label-switching questions I’ve found here, and it’s not really covered by Betancourt’s excellent vignette. Sorry for the long post. The quick summary is that I’m facing label-switching, which isn’t a problem for my application except that it messes up diagnostics such as R-hat and ESS. Correcting for label-switching (post-hoc relabeling) makes the diagnostics much better, but I still seem to need long-ish runs (8 chains, 4000 warmup, 5000 sampling so far) to bring the corrected diagnostics close to the recommended values, and they are still not there yet. That may indicate deeper issues with sampling.

More detail:

I am using a fully exchangeable mixture of 6 multivariate Gaussians as a prior on hierarchical regression coefficients (vectors of length 3). I don’t mind label-switching itself in my MCMC chains, since I’m not trying to make (unidentifiable) inferences about specific mixture components. And if I use careful priors, cmdstanr’s HMC sampling runs fairly quickly without divergences, so I don’t think it’s crazy to fit 6 multivariate components. But the R-hat and ESS automatically produced by cmdstanr look terrible (see below; these are the estimated mixture weights \pi).

Now, we know that label-switching, or more precisely exchangeability, can fool naive R-hat: e.g. R-hat doesn’t know that a chain sampling near mode (\theta_1 = 0, \theta_2 = 1) is actually equivalent to that chain sampling near mode (\theta_1 = 1, \theta_2 = 0) if these parameters are exchangeable. So it can look like two chains haven’t mixed, or one chain isn’t stationary (e.g. first half of the chain appears to be sampling a different mode than the second half) — when actually all is well. That is what I’m hoping is causing most of the problem.
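To make that concrete, here is a toy sketch in Python (standard library only) with two simulated chains and two exchangeable parameters. Everything in it is an assumption for illustration: the draws are synthetic, and `rhat` is the basic non-split Gelman-Rubin statistic, not the rank-normalized split R-hat that cmdstanr reports.

```python
import random
import statistics


def rhat(chains):
    """Basic (non-split, non-rank-normalized) Gelman-Rubin R-hat for one scalar."""
    n = len(chains[0])
    chain_means = [statistics.fmean(c) for c in chains]
    # W: average within-chain variance; B: between-chain variance scaled by n
    w = statistics.fmean([statistics.variance(c) for c in chains])
    b = n * statistics.variance(chain_means)
    var_plus = (n - 1) / n * w + b / n
    return (var_plus / w) ** 0.5


rng = random.Random(0)
n = 1000

# Chain A sits near (theta_1, theta_2) = (0, 1); chain B sits near the
# label-swapped but equivalent mode (1, 0).
chain_a = [(rng.gauss(0, 0.1), rng.gauss(1, 0.1)) for _ in range(n)]
chain_b = [(rng.gauss(1, 0.1), rng.gauss(0, 0.1)) for _ in range(n)]

# Naive R-hat on theta_1: the two chains look completely unmixed.
naive = rhat([[d[0] for d in chain_a], [d[0] for d in chain_b]])

# After relabeling each draw (here: take the smaller of the two values),
# both chains describe the same quantity and R-hat is close to 1.
relabeled = rhat([[min(d) for d in chain_a], [min(d) for d in chain_b]])

print(f"naive R-hat: {naive:.2f}, relabeled R-hat: {relabeled:.3f}")
```

In this toy case the naive value is far above 1 while the relabeled one is essentially 1, even though both chains are sampling the same posterior up to a permutation of labels.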

So currently I’ve been doing the very simplest post-hoc relabeling (there’s a whole literature on this that I’ve been trying desperately to ignore). After sampling, within each chain, for each iteration/draw, I (arbitrarily) re-order the mixture indices by the size of the mixture weight (i.e. I re-order so that mixture weights \pi_1 < \pi_2 < \dots). Exchangeability says this is valid. And R-hat and ESS do look dramatically better for the variables that have had their indices re-ordered.
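A minimal sketch of this kind of per-draw relabeling, again in plain Python rather than the R code one would use with cmdstanr. The function name `relabel_draw` and the example values are hypothetical. The one point it is meant to illustrate is that the permutation that sorts the weights has to be applied to every other component-indexed parameter in the same draw (e.g. the component means), otherwise weights and means no longer refer to the same component.

```python
def relabel_draw(weights, *component_params):
    """Reorder one draw so the weights are ascending, and apply the same
    permutation to every other component-indexed parameter."""
    order = sorted(range(len(weights)), key=lambda k: weights[k])

    def reorder(values):
        return [values[k] for k in order]

    return (reorder(weights), *[reorder(p) for p in component_params])


# Hypothetical single draw: 3 components, each with a length-3 mean vector.
pi = [0.5, 0.2, 0.3]
mu = [
    [1.0, 1.1, 1.2],     # mean of the component with weight 0.5
    [-2.0, -2.1, -2.2],  # mean of the component with weight 0.2
    [0.0, 0.1, 0.2],     # mean of the component with weight 0.3
]

pi_sorted, mu_sorted = relabel_draw(pi, mu)
print(pi_sorted)  # weights in ascending order
print(mu_sorted)  # means permuted to match
```

Applying this to every draw in every chain, and then recomputing R-hat and ESS on the relabeled draws, is the procedure described above.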

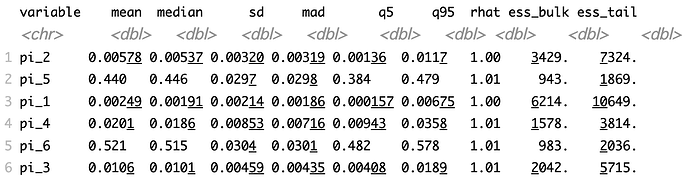

After:

But R-hat and ESS vary a lot between mixture components, and there’s a slightly fishy pattern: after re-ordering, the larger mixture weights tend to have much worse diagnostics. I’m not sure whether that’s indicative of something.

So I’m looking for an explanation and a better solution. One obvious possibility is to change the model so that the mixture weights are an ordered simplex. But there seems to be confusion in the Stan forums about whether that’s slow or unstable or plain dumb, and I don’t have a good intuition about sensible ordered simplex priors (or what it would mean to declare an ordered Dirichlet). And the other symmetry-breaking schemes don’t play nicely with my multivariate model (and frankly look like pretty weird priors).