Hello,

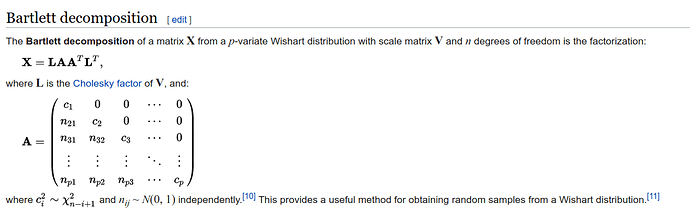

I have a spatial population genetic clustering method in which samples are assigned to, or admixed between, a user-specified number of discrete groups within which relatedness decays with distance. Each group is modeled as a spatial Gaussian process, so the full model is a mixture of spatial Gaussian processes. The data can consist of between tens of thousands to millions of independent observations, so, for efficiency, I summarize with the observed covariance between samples across observations, and model that covariance as a draw from a Wishart with a parametric scale matrix (detailed in the model block below). Implemented in STAN, the model works great for a smallish number of samples (fewer than ~100), but it’s quite slow for larger datasets.

I have two questions:

-

Are there any low-hanging fruit speedups that you can see, either in reworking the syntax or reparameterizations? I know the STAN crew is generally anti-Wishart as a prior, but, because there are so many observations (potentially into the millions), I’m think that the Wishart is more efficient than the multivariate Gaussian for calculating the likelihood.

-

Are there ways to implement rank-one updates to the inverse of the Wishart’s parametric scale matrix in STAN? For some parameters, if I were to update only that parameter, I could use the Sherman-Morrison-Woodbury trick to update a single row/column of the inverse matrix. I know the point of STAN’s sampler is to move in many directions at once in parameter space, but I’m curious if there’s any way to work in this kind of efficiency (or if it’s already happening under the hood).

Thanks, I appreciate the feedback!

functions {

matrix spCov(int N, real aD, matrix D, real mu){

matrix[N,N] cov;

for(i in 1:N){

for(j in i:N){

cov[i,j] = exp( -(aD* D[i,j])) + mu;

cov[j,i] = cov[i,j];

}

}

return cov;

}

matrix admixed_covariance(int N, int K, vector alphaD, matrix geoDist, matrix w_mat, vector nugget, vector mu, real gamma) {

matrix[N,N] parCov;

matrix[N,N] Nug_mat;

parCov = rep_matrix(0,N,N);

Nug_mat = diag_matrix(nugget);

for(k in 1:K){

parCov = parCov + tcrossprod(to_matrix(w_mat[,k])) .* spCov(N,alphaD[k],,geoDist,mu[k]);

}

parCov = gamma + parCov + Nug_mat;

return parCov;

}

matrix make_w_matrix(int N, int K, vector[] w){

matrix[N,K] w_mat;

for(i in 1:N){

w_mat[i] = to_row_vector(w[i]);

}

return w_mat;

}

}

data {

int<lower=1> K; // number of layers

int<lower=2> N; // number of samples

int<lower=N+1> L; // number of loci

matrix[N,N] obsCov; // observed sample covariance

matrix[N, N] geoDist; // matrix of pairwise geographic distance

real varMeanFreqs; // variance in mean allele frequencies across loci

real<lower=0,upper=1> temp; // temperature parameter for estimating marginal likelihood

}

parameters {

vector<lower=0>[K] alphaD; // effect of geographic distance in the parametric covariance in layer k

positive_ordered[K] mu; // shared drift effect in layer k

vector<lower=0>[N] nugget; // sample-specific variance (allele sampling error + sample-specific drift)

simplex[K] w[N]; // every sample (N in total) has a K simplex (i.e. K layers)

real<lower=0> gamma; // baseline covariance between all layers

}

transformed parameters {

matrix[N,N] parCov; // this specifies the parametric, admixed covariance matrix

vector[K] dirConPar; // concentration parameters of dirichlet prior on w

matrix[N,K] w_mat; // converting w simplex to matrix for indexing

dirConPar = rep_vector(0.1,K);

w_mat = make_w_matrix(N,K,w);

parCov = admixed_covariance(N, K, alphaD, geoDist, w_mat, nugget, mu, gamma);

}

model {

alphaD ~ normal(0,1); // prior on alphaD

nugget ~ normal(0,1); // prior on nugget

mu ~ normal(0,1); // prior on nugget

gamma ~ normal(varMeanFreqs,0.5); // prior on nugget

for(i in 1:N) w[i] ~ dirichlet(dirConPar); // prior on admixture proportions

(L*obsCov) ~ wishart(L,parCov); // likelihood function

}