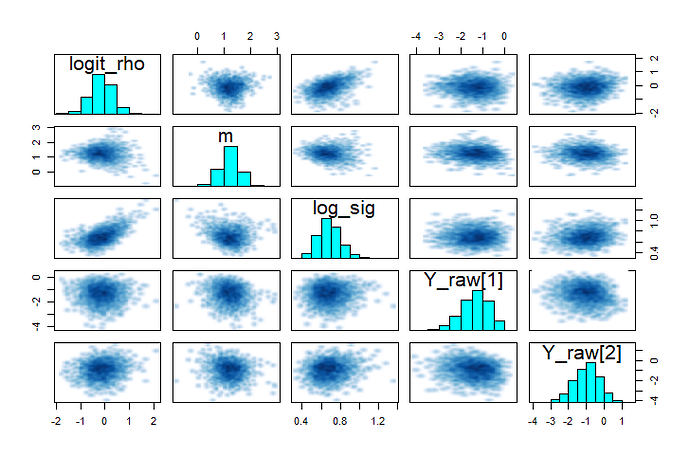

I’m working on a model with a fairly large number of parameters (>5000) that is approaching 1000 lines of code, so I won’t ask for input on the entire thing. However, I believe I have found some candidate parameters that might be causing my current problems with divergences, and they are shown as pairs in the plot. The analysis is temporal: each time step `iy` has a parameter `u[iy]`, which is the autocorrelated temporal effect on “the thing this is modeling.” There are currently 11 time steps (`NrOfYears`) in the model.

`logSu` is the logarithm of `Su`, the standard deviation of `u`.

`logit_rhou` is the logit transform of `rhou`, the first-order autocorrelation of `u` (for now I have excluded negative autocorrelation).

`umean` is the mean that the `u` are distributed around.

`u_raw[1]` and `u_raw[2]` are examples of the raw parameters from which the `u` are constructed, and which are the ones the sampler actually updates.

I’m pasting the code involving these parameters below in the hope that it is enough to understand how they are modeled. One apparent issue is the funnel behavior of `umean`. When a funnel occurs for effects `x` around a mean, it often works to reparameterize as `x[i] = x_raw[i] * sigma` with `x_raw[i] ~ normal(0, 1)`, but here it is the mean itself that shows funnel behavior. Any idea how to transform `umean` to escape the funnel? I also suspect that the correlation between `logSu` and `logit_rhou` could be problematic.
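For reference, a minimal Python sketch (not Stan) of the centered and non-centered forms mentioned above. The names `mu` and `sigma` are illustrative stand-ins, not parameters of my model. The point is only that both forms give the same distribution for `x`; what changes is that in the non-centered form the sampled quantity `x_raw` is a priori independent of `sigma`, which is why it often helps with funnels.

```python
import math
import random


def centered_draw(rng, mu, sigma):
    """Centered form: x ~ normal(mu, sigma), drawn directly."""
    return rng.gauss(mu, sigma)


def non_centered_draw(rng, mu, sigma):
    """Non-centered form: x_raw ~ normal(0, 1), then x = mu + x_raw * sigma."""
    x_raw = rng.gauss(0.0, 1.0)
    return mu + x_raw * sigma


def sample_moments(draws):
    """Return (mean, sample standard deviation) of a list of draws."""
    n = len(draws)
    mean = sum(draws) / n
    var = sum((d - mean) ** 2 for d in draws) / (n - 1)
    return mean, math.sqrt(var)


if __name__ == "__main__":
    rng = random.Random(1)
    mu, sigma = 0.5, 2.0  # illustrative values only
    draws = [non_centered_draw(rng, mu, sigma) for _ in range(100_000)]
    print(sample_moments(draws))  # close to (mu, sigma)
```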

In the plot, the blob of red dots all comes from the same chain, in which almost all iterations diverged.

Grateful for any input.

Transformed parameters

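```stan
rhou = inv_logit(logit_rhou);  // first-order autocorrelation, constrained to (0, 1)
Su = exp(logSu);               // standard deviation of u

// Non-centered stationary AR(1): each u[iy] has marginal standard deviation Su
for (iy in 1:NrOfYears) {
  if (iy == 1) {
    u[iy] = u_raw[iy] * Su;
  } else {
    u[iy] = rhou * u[iy - 1] + u_raw[iy] * sqrt(1 - rhou^2) * Su;
  }
}

for (il in 1:NrOfLans) {
  for (iy in 1:NrOfYears) {
    loga[il, iy] = umean + u[iy] + v[il];  // the part of the model involving v appears to behave better
    a[il, iy] = exp(loga[il, iy]);
  }
}
```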
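A quick numerical check, sketched in Python rather than Stan, that the loop above is a stationary AR(1): because the innovation is scaled by `sqrt(1 - rhou^2) * Su`, the marginal variance of every `u[iy]` stays at `Su^2` whatever the value of `rhou`. The helper names and the values `rho = 0.8`, `s = 0.5` are hypothetical stand-ins for `rhou` and `Su`; 11 years matches `NrOfYears`.

```python
import math
import random


def ar1_non_centered(u_raw, rho, s):
    """Build u from standard-normal innovations u_raw, mirroring the Stan loop:
    u[1] = u_raw[1] * s;  u[t] = rho * u[t-1] + u_raw[t] * sqrt(1 - rho^2) * s."""
    u = []
    for t, z in enumerate(u_raw):
        if t == 0:
            u.append(z * s)
        else:
            u.append(rho * u[t - 1] + z * math.sqrt(1.0 - rho ** 2) * s)
    return u


def marginal_variances(n_years, rho, s):
    """Exact Var(u[t]) from Var_t = rho^2 * Var_{t-1} + (1 - rho^2) * s^2, Var_1 = s^2."""
    variances = [s ** 2]
    for _ in range(1, n_years):
        variances.append(rho ** 2 * variances[-1] + (1.0 - rho ** 2) * s ** 2)
    return variances


if __name__ == "__main__":
    rng = random.Random(2)
    n_years, rho, s = 11, 0.8, 0.5  # hypothetical values
    u_raw = [rng.gauss(0.0, 1.0) for _ in range(n_years)]
    print(ar1_non_centered(u_raw, rho, s))
    print(marginal_variances(n_years, rho, s))  # every entry equals s**2 up to rounding
```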

Model

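```stan
rhou ~ beta(1, 1);  // uniform prior on rhou; adds only a constant
// log-Jacobian of rhou = inv_logit(logit_rhou), needed because the prior is stated on rhou
target += log_inv_logit(logit_rhou) + log1m_inv_logit(logit_rhou);

u_raw ~ normal(0, 1);  // standard-normal innovations (vectorized form of the per-year loop)

Su ~ cauchy(0, 2.5);   // half-Cauchy, since Su = exp(logSu) is always positive
target += logSu;       // log-Jacobian of Su = exp(logSu)
```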
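The two `target +=` lines are the log-Jacobians of `rhou = inv_logit(logit_rhou)` and `Su = exp(logSu)`. A small Python sketch that checks them against finite differences and writes out the prior they imply on the unconstrained pair (`logit_rhou`, `logSu`). The helpers are hypothetical re-implementations mirroring Stan's `log_inv_logit` and `log1m_inv_logit`, not Stan's own code.

```python
import math


def log_inv_logit(x):
    """log(1 / (1 + exp(-x))), computed without overflow."""
    return -math.log1p(math.exp(-x)) if x >= 0 else x - math.log1p(math.exp(x))


def log1m_inv_logit(x):
    """log(1 - inv_logit(x)), which equals log_inv_logit(-x)."""
    return log_inv_logit(-x)


def inv_logit(x):
    return math.exp(log_inv_logit(x))


def log_jacobian_logit(x):
    """log |d rhou / d logit_rhou| for rhou = inv_logit(logit_rhou)."""
    return log_inv_logit(x) + log1m_inv_logit(x)


def log_jacobian_exp(x):
    """log |d Su / d logSu| for Su = exp(logSu); this is just logSu."""
    return x


def numeric_log_derivative(f, x, h=1e-6):
    """Central finite-difference estimate of log |f'(x)|."""
    return math.log(abs(f(x + h) - f(x - h)) / (2.0 * h))


def half_cauchy_logpdf(s, scale):
    """Log density of half-Cauchy(0, scale) at s > 0."""
    return math.log(2.0 / (math.pi * scale)) - math.log1p((s / scale) ** 2)


def log_prior_unconstrained(logit_rhou, log_su, scale=2.5):
    """Prior on (logit_rhou, logSu) implied by rhou ~ beta(1, 1) and
    Su ~ half-Cauchy(0, scale), including both Jacobian terms.
    beta(1, 1) has log density 0 on (0, 1), so only its Jacobian remains."""
    su = math.exp(log_su)
    return (log_jacobian_logit(logit_rhou)
            + half_cauchy_logpdf(su, scale) + log_jacobian_exp(log_su))
```

With the Jacobians included, each unconstrained parameter gets a proper prior: `logit_rhou` is standard logistic, and `logSu` has the density of the log of a half-Cauchy variable.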