Thanks a lot for all your help.

I am trying to adapt my model such as I won’t have this problem.

One of the thing I was thinking of is allowing peak at every point but with a prior that is concentred at 0.

Initially I was thinking of doing a prior that is a mixture model but I don’t think it will work.

Really what I want is to have a heaviside step function with a dynamic “x” and when it is not 0 multiply it by a gamma prior.

I understand it is a bit hard to understand.

I am basically trying to fit something like that:

generate_data <- function(n_point=100,noise_mu=1000,noise_sigma=5,n_peak=5,coef_shape=5,coef_rate=1,tau_min=3,tau_max=10){

idx <- rbinom(n_peak,n_point, runif(n_peak,0,1))

noise <- rnorm(n_point,noise_mu,noise_sigma)

init_data <- noise

coef_delta <- rgamma(n_peak,coef_shape,coef_rate)

tau <- runif(n_peak,tau_min,tau_max)

data <- init_data

for (i in seq_along(idx)){

v <- idx[i]

delt <- coef_delta[i]

t <- tau[i]

data <- data + median(init_data) * delt * as.numeric(seq(1,n_point) >= v ) * c( rep(0,v-1), exp(-c(seq(0,n_point-v)/t )))

}

list(

data = data,

tau = tau,

delta = coef_delta,

peak_idx = idx,

noise = noise

)

}

Where any of the parameters from the function could change.

i.e.

generated <- generate_data()

data <- generated$data

plot(data,t="l")

The model I came up with is this one:

model.code <- "

data{

int<lower=1> T;

real y[T];

}

transformed data{

real<lower=0> median; //median of y

int middle; // idx of middle data

real y_sorted[T];

middle = T / 2;

y_sorted= sort_asc(y);

if (T % 2)

{

median = (y_sorted[middle] + y_sorted[middle + 1]) / 2.0;

}

else

{

median = y_sorted[middle ];

}

}

parameters {

real<lower=0> delta[T];

real<lower=0.0000001> tau[T];

real<lower=0> sigma;

}

transformed parameters {

vector[T] decay; // decay

for (i in 1:T){

decay[i] = 0;

for(j in 0:(i-1)){

decay[i] = decay[i] + median * delta[i-j] * exp(-j / tau[i-j]);

}

}

}

model{

real ps[T];

sigma ~ cauchy(0,5);

delta ~ exponential(1);

y ~ normal( decay , sigma);

}

generated quantities{

real ps_rep[T];

real y_rep[T];

for (i in 1:T){

ps_rep[i] = 0;

for(j in 0:(i-1)){

ps_rep[i] = ps_rep[i] + median * delta[i-j] * exp(-j / tau[i-j]);

}

}

for (nn in 1:T){

y_rep[nn] = ps_rep[nn] ;

}

}

"

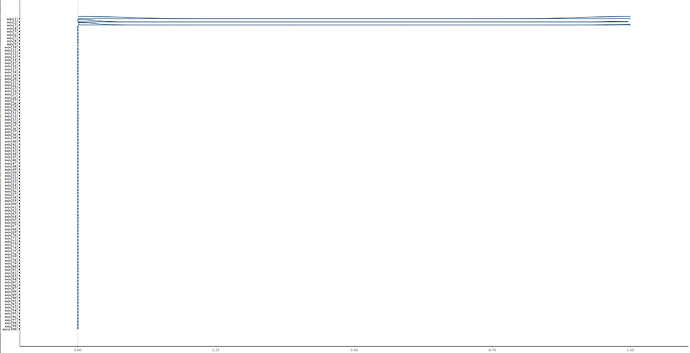

However, as you can see I am not really handling the delta as I should.

And I end up having my sampler not happy:

model.dat <- list(T=100,y=data)

stan.fit <- stan(model_code=model.code,

model_name="lm_decay",

data=model.dat)

There were 4000 divergent transitions after warmup. Increasing adapt_delta above 0.8 may help. See

http://mc-stan.org/misc/warnings.html#divergent-transitions-after-warmupThere were 3 chains where the estimated Bayesian Fraction of Missing Information was low. See

http://mc-stan.org/misc/warnings.html#bfmi-lowExamine the pairs() plot to diagnose sampling problems

More importantly the fit is just bad

library(bayesplot)

y_rep <- rstan::extract(stan.fit,"y_rep")

ppc_dens_overlay(y = data,

yrep = y_rep[[1]][1:100,])

Could someone advise me on how I could solve this or just give me a hint?