Hi all

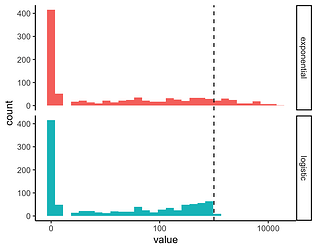

The observed outcome in my study is counts (specifically, bacterial counts), but the counts are distroted in an interesting way: some samples are older than others, making older samples have lots of bacteria (even if they started with a small amount) because there was time for them to grow exponentially. The people who collected the data could not stop some samples from getting old, but they could at least record the age of every sample, which gives me the opportunity to correct for this aging.

Briefly, for an initial bacterial sample i with count Y_{0}^{i}, the actual observed value after reaching age t_{i} is Y_{measured}^{i} = Y_{0}^{i} e^{r t_{i}}, where r is the growth rate of the bacteria, a parameter to be estimated. As is probably obvious to many of you, an initial count of 0 would stay 0, and our data does contain a lot of zeroes (~70%), but the non-zero portion would get stretched down the number line depending on how old every sample is.

Note that we’re interested in the values before the distortion due to growth: that is, I’m interested in modelling Y_{0}^{i} and estimate parameters (mean etc) for it, and not for the measured value, which is distorted. As such, I will need to estimate r as well. A statistical model based on Y_{0}^{i} following a poisson or negative binomial is fine, but we’re measuring Y_{measured}^{i} instead.

I have a feeling that doing the following is a statistical no no:

\frac{Y_{measured}^{i}}{e^{r t_{i}}} \sim \mathrel{NegBin(\mu,\phi)}

That is, dividing my outcome by the vector of expected growth due to age. But if in Stan I do this:

data{

int N;

vector[N] Ymeasured;

vector[N] t;

}

parameters{

vector[N] y0;

real<lower=0> r;

real<lower=0> phi;

...

}

transformed parameters{

vector[N] mu = y0*exp(r * t);

}

model{

Ymeasured ~ neg_binomial_2(mu,phi);

}

This is a problem too because Ymeasured itself is not expected to be distributed as a negative binomial, just because Y0 is, and even if it was, the overdispersion parameter phi would be different before and after aging.

I think if I change my model from a negative binomial to a poisson or a hurdle type, I will still have this same problem: after distortion, I’m not sure of the distribution anymore, but I have better ideas (and more interest in) the distribution of y0.

Any advice appreciated