I have encountered a modeling problem that I don’t fully understand while trying to model a generalized non-linear mixed model for item response theory. Normally, I run these models by treating the items as random, so the model would look like the following:

IRT_priors <- <-

prior("normal(0, 3)", class = "b", nlpar = "eta") +

prior("normal(0, 1)", class = "b", nlpar = "logalpha") +

prior("normal(0, 3)", class = "sd", group = "Item", nlpar = "eta") +

prior("normal(0, 1)", class = "sd", group = "Item", nlpar = "logalpha")

SEED <- 12345

TwoPL <- brm(bf(response ~ exp(logalpha) * eta,

eta ~ 1 + (1 |i| Item) + (1 | ID),

logalpha ~ 1 + (1 |i| Item),

nl = TRUE),

data = df, family = bernoulli("logit"), prior = IRT_priors,

iter = 3000, warmup = 1000, seed = SEED)

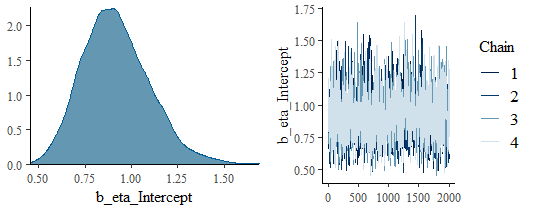

For the current particular need, however, I wanted to treat the items as fixed versus random. I initially ran the fixed items model with the intercept included, and the models ran perfectly fine. A co-author noted that I had left the intercept in and recommended that I remove it. I didn’t think that this would do anything to model since the first item on the test would just become the intercept; however, I started encountering some estimation problems after removing the intercept. Specifically, I get low Bulk ESS for my unidimensional models (corrected by simply increasing the iterations) but then non-convergences (R-hats as high as 1.84) and divergent transitions (up to ~150 with adapt_delta set to 0.80) for multidimensional models that fit without any need to adjust default settings when the intercept is included.

I assume that the greater autocorrelation in the chains and lower ESS is related to loss of centered parameterization when the intercept is removed, but the lack of convergence and divergent transitions made me think that perhaps the priors needed to be changed to account for the lack of intercept. I used the simpler intercept-only model to try to figure out what my prior specifications were doing via prior predictive checks as follows:

TwoPL_priors_fixed <-

prior("normal(0, 2)", class = "b", nlpar = "eta") +

prior("normal(0, 1.5)", class = "b", nlpar = "logalpha") +

prior("normal(0, 1)", class = "sd", group = "ID", nlpar = "eta")

TwoPL_noint <- brm(bf(Resp ~ exp(logalpha) * eta,

eta ~ 0 + Item + (1 | ID),

logalpha ~ 0 + Item,

nl = TRUE),

data = df_long, family = bernoulli("logit"),

prior = TwoPL_priors_fixed, seed = SEED, iter = 3000, warmup = 1000,

sample_prior = "only")

TwoPL_inter <- brm(bf(Resp ~ exp(logalpha) * eta,

eta ~ 1 + Item + (1 | ID),

logalpha ~ 1 + Item,

nl = TRUE),

data = df_long, family = bernoulli("logit"),

prior = TwoPL_priors_fixed, seed = SEED, iter = 3000, warmup = 1000,

sample_prior = "only")

pp_check(TwoPL_noint, type = "bars")

pp_check(TwoPL_inter, type = "bars")

NoInt_parameters <- posterior_sample(TwoPL_noint)

Inter_parameters <- posterior_sample(TwoPL_inter)

The following plot is the prior predictive check from the model with the intercept (just predicting the total number of incorrect/0 and correct/1 responses):

And this is the prior predictive check from the model without the intercept:

Clearly, the model without the intercept is not covering nearly the same range of predictions despite using the same priors. Since the seed between the two models is also the same, I checked whether my understanding about the intercept was correct (i.e., that with an intercept, the first item will just “become” the intercept):

all.equal(NoInt_parameters, Inter_parameters, check.attributes = FALSE)

[1] TRUE

The only difference in the posteriors is that, when there’s an intercept, the first item’s name is “Intercept” instead of “Item1.” I’m admittedly unsure of why that seems to be the case. When I extract the priors via prior_summary(), there is a b coefficient named “Intercept” in the intercept model; however, I’m more familiar with having to set a prior for the intercept as a specific class (e.g., prior("normal(0, 1)", class = "Intercept")), so I don’t know why in this case there is a coefficient for a renamed item. The fact that I get the very same estimates for all the model parameters sampling from the same priors on a model that differs only in whether an intercept is modeled or not makes sense, but I don’t understand why the priors seem to be so much more informative in the case of the no-intercept model if everything else is the same. I also don’t know whether that difference in prior behavior is producing the pathological model estimation when I drop the intercept, though I assume it is.